Human speech is a system of communication that requires coordination of voice, articulation and language skills. Voice is produced by the movement of air through the vocal cords, which makes them vibrate.

The vocal cords are made of elastic connective tissue covered by folds of membrane. The pitch of your voice is controlled by muscles that change the tension of the cords, pulling them tight for a high pitch and letting them go slack for a low pitch.

Articulation is the modification of airflow. Speech is articulated by movements of the tongue, lips, lower jaw and soft palate. Vowels come from voiced excitation of the vocal tract: the articulators are kept static and all the sound radiates from the mouth. Nasals are generated when the oral passage is blocked (for example by raising the tongue) and the soft palate is lowered, so that the sound passes through the nose. Plosives are generated when the vocal tract is closed by the tongue or lips and the pressure built up behind the closure is suddenly released. Fricatives are formed by partially restricting the vocal tract with the tongue or lips, which makes the airflow turbulent.

...Refined Notes on ....

Monday, 24 December 2012

The Moving Image and Video

The first moving television image was demonstrated in 1926 by John Logie Baird. It relied on the old idea of persistence of vision (PoV). This is the theory that the eye retains an image for about 1/25th of a second. If a second image is shown before that retention ends, the observer does not notice the change from one image to the next.

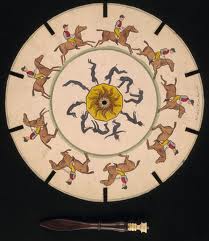

The image shows an early toy called the zoetrope, which created the illusion of motion. The earliest version dates from around AD 180, and a modern version was reinvented in 1833. The viewer looked through the slits. Because the images rotated quickly, the series of still images appeared to move.

Persistence of vision is now widely regarded as a myth. A more plausible explanation is that two distinct perceptual illusions are involved: the phi phenomenon and beta movement.

Bitrate describes how many bits are needed to show one second of video. It can vary from as low as 300 kbps for low-quality video up to 8000 kbps for high-quality video.
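Bitrate is measured in bits per second, so multiplying it by a duration gives the amount of data. The sketch below is a minimal illustration. It assumes a constant bitrate, treats 1 kbps as 1000 bits per second, and ignores audio and container overhead. The function name `video_size_mb` is hypothetical.

```python
def video_size_mb(bitrate_kbps: float, duration_s: float) -> float:
    """Approximate size in megabytes (1 MB = 1,000,000 bytes) of a constant-bitrate stream."""
    total_bits = bitrate_kbps * 1000 * duration_s  # kilobits per second -> total bits
    return total_bits / 8 / 1_000_000               # bits -> bytes -> megabytes


# One minute of video at the two ends of the range quoted above.
for kbps in (300, 8000):
    print(f"{kbps} kbps for 60 s is about {video_size_mb(kbps, 60):.2f} MB")
```

Under these assumptions, one minute comes to about 2.25 MB at 300 kbps and 60 MB at 8000 kbps.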

Interlacing is a way of getting the best picture from limited bandwidth. It works by alternately showing the odd-numbered lines of one image and then the even-numbered lines of the next, building up 25 frames per second. Only half the lines are sent at a time, yet the images still appear to move smoothly.
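Splitting a frame into its two fields takes only a few lines of code. This is a hypothetical illustration, not broadcast code. A frame is modelled as a Python list of scan lines. As a simplification, both fields are taken from one stored frame rather than from two successive images. The 50 fields per second simply follows from two fields per frame at 25 frames per second.

```python
from typing import List, Tuple

Frame = List[List[int]]  # a frame is a list of scan lines; each line is a list of pixel values

FRAMES_PER_SECOND = 25                     # European rate quoted in the notes
FIELDS_PER_SECOND = 2 * FRAMES_PER_SECOND  # each frame is sent as two half-frames (fields)


def split_fields(frame: Frame) -> Tuple[Frame, Frame]:
    """Return (odd field, even field). List index 0 holds scan line 1, so odd lines are [0::2]."""
    return frame[0::2], frame[1::2]


def weave(odd_field: Frame, even_field: Frame) -> Frame:
    """Interleave an odd field and an even field back into a full frame."""
    frame: Frame = []
    for i, line in enumerate(odd_field):
        frame.append(line)
        if i < len(even_field):
            frame.append(even_field[i])
    return frame


# A tiny six-line "frame" where every pixel on a line holds that line's number.
frame = [[n] * 4 for n in range(1, 7)]
odd, even = split_fields(frame)
print([line[0] for line in odd])   # [1, 3, 5]
print([line[0] for line in even])  # [2, 4, 6]
print(weave(odd, even) == frame)   # True
```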

Resolution is the number of pixels a television can display. When analogue TV was in use, a typical resolution in Europe was 352x288. By comparison, modern televisions can display as many as 1920x1200 pixels. DVD quality is typically 720x576 for European televisions, while Blu-ray and HD provide the highest resolutions.
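Resolution determines how much raw data each frame carries. The uncompressed data rate is width × height × bits per pixel × frames per second. The sketch below is a rough calculation that assumes 24 bits per pixel and 25 frames per second. Neither figure is given in the notes.

```python
def raw_bitrate_mbps(width: int, height: int, fps: float, bits_per_pixel: int = 24) -> float:
    """Uncompressed video data rate in megabits per second (1 Mbps = 1,000,000 bits/s)."""
    return width * height * bits_per_pixel * fps / 1_000_000


# Resolutions mentioned in the notes; 25 fps and 24-bit colour are assumptions.
for name, (w, h) in {
    "352x288": (352, 288),
    "DVD 720x576": (720, 576),
    "1920x1200": (1920, 1200),
}.items():
    print(f"{name}: {w * h:,} pixels, about {raw_bitrate_mbps(w, h, 25):,.1f} Mbps uncompressed")
```

Even the smallest of these comes to roughly 61 Mbps. That is far above the 300 to 8000 kbps bitrates quoted earlier, which is why compressed formats are needed.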

File Format

MPEG-1 - Work on it began in 1988, and it was the first MPEG standard. It could compress video by about 26:1 and audio by about 6:1. It was designed to compress VHS-quality raw video with minimal loss in quality.
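To show what 26:1 and 6:1 mean in practice, the sketch below divides assumed raw bitrates by those ratios. The raw sources are assumptions, not taken from the notes:

- 352x288 video at 25 fps with 12 bits per pixel (4:2:0 colour sampling)
- CD-quality stereo audio at 44.1 kHz, 16-bit

```python
def compressed_bitrate(raw_bps: float, ratio: float) -> float:
    """Bitrate after compressing at ratio:1."""
    return raw_bps / ratio


# Assumed raw sources (not given in the notes):
raw_video_bps = 352 * 288 * 12 * 25  # 352x288 pixels, 12 bits/pixel (4:2:0 colour), 25 fps
raw_audio_bps = 44_100 * 16 * 2      # CD audio: 44.1 kHz, 16-bit samples, 2 channels

video_bps = compressed_bitrate(raw_video_bps, 26)  # about 26:1 for video
audio_bps = compressed_bitrate(raw_audio_bps, 6)   # about 6:1 for audio

print(f"video: {raw_video_bps / 1e6:.1f} Mbps -> {video_bps / 1e6:.2f} Mbps")
print(f"audio: {raw_audio_bps / 1e3:.1f} kbps -> {audio_bps / 1e3:.1f} kbps")
```

Under these assumptions, the video drops from about 30.4 Mbps to about 1.17 Mbps. The audio drops from 1411.2 kbps to 235.2 kbps.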

MPEG-2 - An improvement on MPEG-1 and the format of choice for digital television broadcasters. Work on it began as early as 1990, before MPEG-1 was released.

MPEG-4 - Integrates many features of previous versions while adding support for 3-D rendering, digital rights management (DRM) and other interactivity.

QuickTime - Created by Apple and released in 1991, almost a year before Microsoft's equivalent software.

QuickTime files can also be played back in VLC and MPlayer, both of which run on PCLinuxOS.

AVI - Introduced by Microsoft in 1992. One problem with this format was that video could appear stretched or squeezed during playback, although players such as VLC and MPlayer solved most of these problems. A major advantage is that it can be played back on almost every player and machine, making it second only to MPEG-1 in compatibility.

WMV - Made by Microsoft and built on several codecs; one of them, VC-1, is used on Blu-ray discs.

3GP - Used for CDMA phones in the US. Some phones use MP4.

FLV - Used to deliver video over the internet by YouTube, Google Video, Yahoo Video, Metacafe and many other sites.

Monday, 17 December 2012

Intro to Cool Edit Pro

Cool Edit Pro is a piece of software that displays a sound file as a digital waveform and lets you edit that wave. You can choose the point from which you want to listen to the sound file, or select a specific section to play by highlighting it.

The wave can be manipulated in various ways, such as copying, pasting and trimming the whole wave or selected parts of it. You can also add other waves onto or into the file to extend it. While the wave is open, you can change how it is displayed on screen, for example the time format, the time signature or the scales, to allow more accurate editing.
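In digital terms, copying, trimming and pasting are operations on a list of samples. The on-screen time is first converted to a sample index. This is a minimal sketch that assumes a mono wave held as a Python list. It is not how Cool Edit Pro is implemented, and all function names are hypothetical.

```python
from typing import List

Samples = List[float]  # a mono wave as a list of sample values


def to_index(seconds: float, sample_rate: int) -> int:
    """Convert a position on the timeline (in seconds) to a sample index."""
    return round(seconds * sample_rate)


def copy_selection(wave: Samples, start_s: float, end_s: float, sample_rate: int) -> Samples:
    """Copy the highlighted section; trimming a wave to a selection keeps exactly this part."""
    return wave[to_index(start_s, sample_rate):to_index(end_s, sample_rate)]


def cut_selection(wave: Samples, start_s: float, end_s: float, sample_rate: int) -> Samples:
    """Remove the highlighted section and close the gap."""
    a, b = to_index(start_s, sample_rate), to_index(end_s, sample_rate)
    return wave[:a] + wave[b:]


def paste(wave: Samples, clip: Samples, at_s: float, sample_rate: int) -> Samples:
    """Insert clip at the given time, pushing the rest of the wave later (this extends the file)."""
    i = to_index(at_s, sample_rate)
    return wave[:i] + clip + wave[i:]


# Hypothetical 2-second wave at a toy sample rate of 10 samples per second.
rate = 10
wave = [float(n) for n in range(20)]
clip = copy_selection(wave, 0.5, 1.0, rate)  # samples 5..9
shorter = cut_selection(wave, 0.5, 1.0, rate)
longer = paste(wave, clip, 2.0, rate)        # append the copy at the end
print(len(clip), len(shorter), len(longer))  # 5 15 25
```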

You can also zoom in and out of the wave for greater detail and more precise selections. Other effects include reverse, which plays the wave backwards and so changes how it sounds, and invert, which flips the wave upside down. Changing the amplitude changes the volume of the sound file. The sound can also be faded to create quieter sections or to merge two sounds. Other features include delay, reverb and echo.
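Several of these effects reduce to simple arithmetic on each sample. The sketch below assumes a mono wave stored as a list of floats, and the function names are hypothetical. The decibel conversion uses the standard amplitude relation gain = 10^(dB/20).

```python
from typing import List

Samples = List[float]  # a mono wave as a list of sample values (-1.0 to 1.0)


def reverse(wave: Samples) -> Samples:
    """Play the wave backwards."""
    return wave[::-1]


def invert(wave: Samples) -> Samples:
    """Flip the wave upside down: positive samples become negative and vice versa."""
    return [-s for s in wave]


def amplify_db(wave: Samples, db: float) -> Samples:
    """Change the amplitude by `db` decibels (amplitude gain = 10 ** (db / 20))."""
    gain = 10 ** (db / 20)
    return [s * gain for s in wave]


def fade_in(wave: Samples) -> Samples:
    """Linear fade from silence at the first sample to full volume at the last."""
    n = len(wave)
    if n < 2:
        return list(wave)
    return [s * i / (n - 1) for i, s in enumerate(wave)]


def fade_out(wave: Samples) -> Samples:
    """Linear fade from full volume to silence (a fade-in applied to the reversed wave)."""
    return reverse(fade_in(reverse(wave)))
```

For example, the 10.98 dB boost used in the Week 7 lab corresponds to multiplying each sample by about 3.5.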

Looking at Light

The concepts of looking, seeing and observing are very different. Looking is readying yourself to see something. Seeing is when the eye actually receives light energy and converts it into nerve impulses. Observing is when we analyse and interpret those nerve impulses and decide what they represent, e.g. an object or an effect.

On cloudy days light appears white, but it actually has a bluish tint. The clouds soften the light by diffusing it, spreading it out.

Front lighting occurs when the light source is behind the observer, so the object is lit evenly. Side lighting creates strong shadows, which emphasise texture and add depth to an image. The most dramatic lighting, though, is back lighting, where the light source is behind the object, creating silhouettes and other interesting effects.

Light

Light is a form of energy detected by the human eye. It has been observed to behave both as a stream of particles and as a wave. Light differs from sound in one important way. Sound needs a medium to travel through, so it cannot cross the vacuum of outer space. Light, however, travels directly from the sun through the vacuum of space to reach Earth.

Light travels as a transverse wave, much like a ripple on the surface of water. It vibrates at right angles (up/down and left/right) to the direction in which it travels. Light is the same kind of wave as radio waves. Each colour is a different frequency, and the eye acts like a radio receiver. The visible frequency range is from about 400 THz (red) to 750 THz (violet).

Light travels at about 300,000,000 m/s in a vacuum. It slows down slightly in air and to about two-thirds of that speed in glass, and this slowing is what makes glass useful for lenses. Frequency is the number of vibrations per second, and a light wave's frequency does not depend on the medium it is travelling through. Because light is slower in glass, the same light wave has a shorter wavelength there.
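The relationship wavelength = speed / frequency can be checked against the figures in these notes. This minimal sketch assumes exactly 3 × 10^8 m/s in a vacuum and two-thirds of that in glass.

```python
C_VACUUM = 3.0e8            # m/s, the figure used in these notes
C_GLASS = C_VACUUM * 2 / 3  # "slows to 2/3rds in glass"


def wavelength_nm(frequency_hz: float, speed_m_s: float = C_VACUUM) -> float:
    """Wavelength = speed / frequency, converted from metres to nanometres."""
    return speed_m_s / frequency_hz * 1e9


for colour, f in (("red", 400e12), ("violet", 750e12)):
    print(f"{colour}: {wavelength_nm(f):.0f} nm in a vacuum, {wavelength_nm(f, C_GLASS):.0f} nm in glass")
```

Red comes out at 750 nm and violet at 400 nm in a vacuum. In glass the same waves shrink to about 500 nm and 267 nm, while their frequencies stay the same.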

White light is made up of every colour of the spectrum, though some colours are more prominent than others.

Shown above is the spectrum of visible light, together with the wavelengths at both extremes that the eye cannot detect. Each light source emits a different mix of these colour frequencies. Waves with wavelengths longer than about 700 nm are infrared, and those shorter than about 400 nm are ultraviolet. Of the sun's energy reaching the Earth's surface, about 50% is visible light and about 3% is ultraviolet; the rest is infrared.

Monochromatic (single-colour) light is uncommon, but it is used in street lighting. Under this type of lighting the world appears completely colourless, in shades of grey.

Scientists measure brightness in candelas and lumens, while photographers use light exposure meters that scientists have calibrated. A candela measures the light emitted from a source in a particular direction; a standard candle emits about 1 candela. A lumen measures the total amount of light emitted from a source in all directions.
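The candela and the lumen are linked through solid angle: one lumen is one candela spread over one steradian. The sketch below assumes an idealised source that emits the same intensity in every direction, which a real candle does not. Because a full sphere is 4π steradians, the total flux is 4π times the intensity.

```python
import math


def lumens_from_candela(candela: float) -> float:
    """Total luminous flux (lm) of a source that emits `candela` equally in every direction.

    A full sphere is 4*pi steradians, and 1 lm = 1 cd x 1 sr.
    """
    return 4 * math.pi * candela


print(f"{lumens_from_candela(1):.2f} lm")  # about 12.57 lm for a 1 cd uniform source
```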

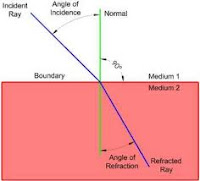

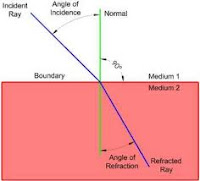

Once emitted from the source, light waves can be transmitted, reflected, absorbed, scattered and refracted. Transmitted light is the same as emitted light; the term simply describes the source producing light waves. Reflected light is light that bounces off a solid object, e.g. a mirror. The angle of reflection is equal to the angle at which the light hits the surface (the angle of incidence).

The reflection shown in the diagram is known as SPECULAR reflection. There is also DIFFUSE reflection, which happens when the light waves hit a rough surface. The light can then reflect in many directions, which is known as scattered light. Absorbed light is light that reaches a solid surface but is not reflected. Instead, it is absorbed by the material, usually turning into heat.

Refraction takes place when light waves pass into a transparent object and again when they pass back out the other side. In simple terms, refraction is the bending of light. As the light waves pass into and out of the medium, they speed up or slow down depending on the speed of light in that particular medium. This explains why it is difficult to see things that are submerged in water and why they appear bent. The light bends as it passes from water into air on its way to the observer's eye.
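The bending described above is quantified by Snell's law, n1 sin θ1 = n2 sin θ2, where n = c / v is the refractive index. In this sketch the glass index comes from the two-thirds figure in these notes. The water index of 1.33 is an assumed typical value.

```python
import math
from typing import Optional

C = 3.0e8  # speed of light in a vacuum (m/s), as used in these notes


def refractive_index(speed_in_medium: float) -> float:
    """n = c / v: how many times slower light travels in the medium than in a vacuum."""
    return C / speed_in_medium


def refraction_angle_deg(incidence_deg: float, n1: float, n2: float) -> Optional[float]:
    """Snell's law, n1*sin(theta1) = n2*sin(theta2); angles measured from the normal.

    Returns None when no refracted ray exists (total internal reflection).
    """
    s = n1 / n2 * math.sin(math.radians(incidence_deg))
    if abs(s) > 1:
        return None
    return math.degrees(math.asin(s))


n_glass = refractive_index(C * 2 / 3)  # 1.5, from "slows to 2/3rds in glass"
n_water = 1.33                         # assumed typical value for water
n_air = 1.0                            # air is very close to a vacuum

# A ray leaving water at 30 degrees bends away from the normal as it enters air.
print(refraction_angle_deg(30, n_water, n_air))
```

A ray leaving water at 30° from the normal enters the air at roughly 42°. This is why submerged objects appear displaced and bent.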

The Inverse Square Law states that the intensity of light at a point is inversely proportional to the square of the distance from the light source.
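The following worked example of the inverse square law assumes an ideal point source with no absorption or scattering along the way. It reports intensity relative to a reference distance.

```python
def relative_intensity(distance: float, reference_distance: float = 1.0) -> float:
    """Intensity at `distance` as a fraction of the intensity at `reference_distance`."""
    return (reference_distance / distance) ** 2


for d in (1, 2, 3, 4):
    print(f"{d} m: {relative_intensity(d):.4f} of the intensity at 1 m")
```

Doubling the distance gives a quarter of the intensity, and quadrupling it gives one sixteenth.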

Wednesday, 28 November 2012

Week 7 Lab 4

The aim of this lab was to take a sound file downloaded from Moodle and improve its quality. After downloading the wave and opening it in Cool Edit Pro, I began to investigate ways to improve it.

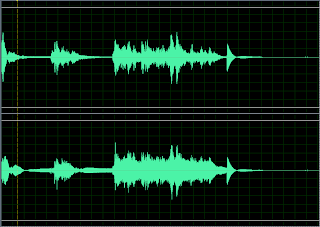

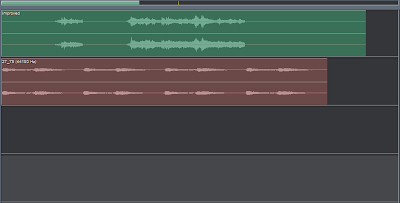

This image shows the final improved .wav after a series of small changes. First, I applied a notch filter set at 440Hz and 2dB. I also added DTMF lower tones at 100dB and DTMF upper tones at 2dB. I then zoomed in on the spoken sections (the peaks) and amplified them by 10.98dB. I tried amplifying them further, but this caused 'white noise', i.e. a loud continuous noise. Finally, I used the hiss reduction function to clean up the overall quality of the sound.
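A notch filter removes a narrow band around one frequency while leaving the rest of the sound largely untouched. The sketch below is a generic second-order IIR notch using the widely published RBJ Audio EQ Cookbook formulas, not Cool Edit Pro's own filter. The Q of 10 and the 8000 Hz sample rate are assumptions. The sketch does not try to reproduce the lab's '2dB' setting, because the notes do not say exactly what that setting controls.

```python
import math
from typing import List


def notch_filter(samples: List[float], sample_rate: int, f0: float, q: float = 10.0) -> List[float]:
    """Second-order IIR notch centred on f0 Hz (biquad coefficients from the RBJ Audio EQ Cookbook)."""
    w0 = 2 * math.pi * f0 / sample_rate
    alpha = math.sin(w0) / (2 * q)  # larger q -> narrower notch
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha
    # Coefficients normalised by a0
    b0, b1, b2 = 1 / a0, -2 * cos_w0 / a0, 1 / a0
    a1, a2 = -2 * cos_w0 / a0, (1 - alpha) / a0
    out: List[float] = []
    x1 = x2 = y1 = y2 = 0.0  # previous inputs and outputs
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def tone(freq: float, sample_rate: int, seconds: float) -> List[float]:
    """A test sine wave with peak amplitude 1."""
    return [math.sin(2 * math.pi * freq * n / sample_rate) for n in range(int(sample_rate * seconds))]


sr = 8000                                            # assumed sample rate
hum = notch_filter(tone(440, sr, 1.0), sr, 440)      # tone at the notch frequency
passed = notch_filter(tone(1000, sr, 1.0), sr, 440)  # tone well away from the notch
# Skip the first half-second so the filter's start-up transient has died away.
print(max(abs(s) for s in hum[sr // 2:]))     # close to 0: 440 Hz is removed
print(max(abs(s) for s in passed[sr // 2:]))  # close to 1: 1000 Hz passes
```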

The second part of the Lab was to edit the .wav to make the speaker sound angry. This was more difficult than the first part of the task and took longer.

I achieved this part of the task by making the start of each word louder and by gradually increasing the volume of the sentence. The wave resulting from the steps below is shown on the right. First, I individually selected the first letter of each word and increased its amplitude by only 1dB, which was enough to make those letters noticeably louder and more hard-hitting. Then, with the entire wave selected, I added a gradual amplitude change of 2dB from the start of the sound through to the very end of the spoken section of the file.
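Both steps can be expressed as a gain applied to each sample: a small boost on selected regions, then a gradual gain change across the sentence. This sketch assumes a mono wave as a list of floats, and the regions are hypothetical sample ranges. It is not Cool Edit Pro's implementation.

```python
from typing import List, Tuple

Samples = List[float]


def db_to_gain(db: float) -> float:
    """Convert a decibel change to an amplitude multiplier."""
    return 10 ** (db / 20)


def boost_regions(wave: Samples, regions: List[Tuple[int, int]], db: float) -> Samples:
    """Amplify only the (start, end) sample ranges given, e.g. the first letter of each word."""
    out = list(wave)
    gain = db_to_gain(db)
    for start, end in regions:
        for i in range(start, min(end, len(out))):
            out[i] *= gain
    return out


def gain_ramp(wave: Samples, end_db: float, ramp_end: int) -> Samples:
    """Raise the gain steadily from 0 dB at sample 0 to end_db at sample ramp_end.

    Samples after ramp_end (e.g. silence after the speech) stay at end_db.
    """
    out = []
    for i, s in enumerate(wave):
        fraction = min(i / ramp_end, 1.0) if ramp_end > 0 else 1.0
        out.append(s * db_to_gain(end_db * fraction))
    return out


# Hypothetical 8-sample "sentence": boost two word onsets by 1 dB, then ramp up to +2 dB.
sentence = [0.5] * 8
angry = gain_ramp(boost_regions(sentence, [(0, 1), (4, 5)], 1.0), 2.0, 7)
```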

Section 3 of the lab was actually very easy, despite sounding quite difficult. The task was to take the already edited .wav and make it sound as if it had been recorded in a church hall. Working on the improved sound file, I selected the Church option under reverberation and applied it to the entire wave. I then added a little amplification to boost the volume slightly, because the reverb had made the sound a little quieter.

The final part of this lab was to add church bells in the distance. I started with the completely edited .wav (shown in green) and then found an extra .wav of a church bell (shown in red). I duplicated the bell sound to double its length so that it spanned the length of the original sound file. I amplified the church bell .wav by -10dB so that it was quiet compared to the speech, as the bells were meant to be outside the church the man is standing in.
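This sketch shows the three steps: duplicate the bell until it spans the speech, attenuate it by 10 dB, and overlay it on the speech. The data and function names are hypothetical. The mix simply adds samples, so a real editor may also need to guard against clipping.

```python
from typing import List

Samples = List[float]


def loop_to_length(clip: Samples, length: int) -> Samples:
    """Repeat a clip end to end (like duplicating it) until it is exactly `length` samples long."""
    if not clip:
        return [0.0] * length
    out: Samples = []
    while len(out) < length:
        out.extend(clip)
    return out[:length]


def mix(a: Samples, b: Samples) -> Samples:
    """Overlay two waves by adding their samples; the shorter one is padded with silence."""
    n = max(len(a), len(b))
    return [(a[i] if i < len(a) else 0.0) + (b[i] if i < len(b) else 0.0) for i in range(n)]


# Hypothetical data: an 8-sample speech wave and a 3-sample bell.
speech = [0.5, -0.5] * 4
bell = [1.0, -1.0, 0.0]
bell_gain = 10 ** (-10 / 20)  # -10 dB is roughly 0.32 x the amplitude
distant_bells = [s * bell_gain for s in loop_to_length(bell, len(speech))]
final = mix(speech, distant_bells)
```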

Thursday, 8 November 2012

Hearing Lecture

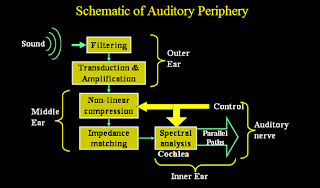

The Middle Ear - Sound travels down the ear canal to the ear drum, causing it to vibrate. The vibration of the ear drum is transferred through small bones called the ossicles, and this vibration in turn causes the cochlea to vibrate. The ossicles pass on the vibration with little loss of power, and at the cochlea it is transferred into the fluid medium of the cochlea. Loud sounds create excessive vibration in the ossicles, which can damage hearing. A neuro-muscular feedback system in the ear therefore helps to protect it from damage.

The Inner Ear - Vibrations at the oval window create waves in the cochlear fluid, which in turn cause the cochlea to perform a spectral analysis of the sound. Sensory cells cause neurons in the auditory nerve to react. The timing, amplitude and frequency information is carried to the auditory brainstem, where neural processing takes place.

Malleus - a small hammer-shaped bone, one of the ossicles of the middle ear.

Stapes - Transmits the sound vibrations from the incus to the cochlea.

Incus - Connects the Malleus to the Stapes.

Scala Vestibuli - a fluid-filled cavity inside the cochlea that conducts the vibrations to the scala media.

Scala Media - Also known as the cochlear duct, it is another fluid-filled cavity inside the cochlea.

Scala Tympani - Its function is the same as that of the scala vestibuli: to transduce the movement of air that causes the vibrations in the ossicles.

Organ of Corti - This section contains the auditory sensory cells, which in turn connect to the brain. It is only found in mammals.

Impedance matching is a very important mechanism in the middle ear. It transfers the vibrations created by the sounds you hear from the large tympanic membrane to the smaller oval window. This is vital because air has a low impedance while the cochlear fluid has a high impedance. Without this matching, only about 0.1% of the sound energy would be transmitted into the fluid.
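The 0.1% figure can be checked with the standard formula for the fraction of sound intensity transmitted across a boundary between two media at normal incidence: T = 4·Z1·Z2 / (Z1 + Z2)^2. The impedance values here are approximate textbook figures, with water standing in for cochlear fluid. They are assumptions, not figures from the lecture.

```python
def intensity_transmission(z1: float, z2: float) -> float:
    """Fraction of sound intensity transmitted from medium 1 into medium 2 (normal incidence)."""
    return 4 * z1 * z2 / (z1 + z2) ** 2


Z_AIR = 415.0     # rayl (Pa*s/m), approximate value for air at room temperature
Z_WATER = 1.48e6  # rayl, approximate value for water, standing in for cochlear fluid

t = intensity_transmission(Z_AIR, Z_WATER)
print(f"{t:.2%} of the sound energy is transmitted")
```

With these values, T comes out at about 0.11%, which matches the figure above.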

Auditory Brainstem

The main features of auditory brainstem processing are:

- a two-channel set of time-domain signals in contiguous, non-linearly spaced frequency bands
- separation of left-ear from right-ear signals, low- from high-frequency information, and timing from intensity information
- re-integration and re-distribution at various specialised processing centres
- binaural lateralisation
- binaural unmasking
- listening in the gaps
- channel modelling
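The notes do not say which frequency scale the non-linearly spaced bands follow. As an illustration only, this sketch assumes the ERB-rate scale of Glasberg and Moore (1990), ERB-rate = 21.4·log10(1 + 0.00437·f). It places band centres at equal steps on that scale, so the spacing in hertz widens as frequency rises. The 100 Hz to 8 kHz range and the band count are hypothetical.

```python
import math
from typing import List


def hz_to_erb_rate(f_hz: float) -> float:
    """Frequency in Hz -> position on the ERB-rate scale."""
    return 21.4 * math.log10(1 + 0.00437 * f_hz)


def erb_rate_to_hz(erb: float) -> float:
    """Inverse of hz_to_erb_rate."""
    return (10 ** (erb / 21.4) - 1) / 0.00437


def band_centres(low_hz: float, high_hz: float, n: int) -> List[float]:
    """n band centre frequencies (n >= 2) equally spaced on the ERB-rate scale."""
    lo, hi = hz_to_erb_rate(low_hz), hz_to_erb_rate(high_hz)
    step = (hi - lo) / (n - 1)
    return [erb_rate_to_hz(lo + i * step) for i in range(n)]


centres = band_centres(100, 8000, 10)  # hypothetical range and band count
gaps = [b - a for a, b in zip(centres, centres[1:])]
print([round(c) for c in centres])
print([round(g) for g in gaps])        # each gap is wider than the one before
```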
